- Tutorial Mac OS X RabbitMQ 2017 software
- Tutorial Mac OS X RabbitMQ 2017 install
- Tutorial Mac OS X RabbitMQ 2017 download

# Tutorial Mac OS X RabbitMQ 2017 software #

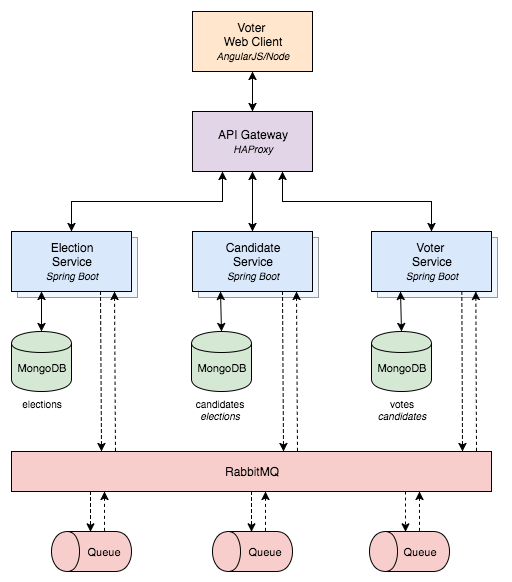

Amazon SageMaker takes away the heavy lifting of machine learning, so you can build, train, and deploy machine learning models quickly and easily. You can begin training your model with a single click in the Amazon SageMaker console, and you also have the option of using your own framework. Amazon SageMaker manages all of the underlying infrastructure for you and can scale to train models at petabyte scale. To make the training process even faster and easier, Amazon SageMaker can automatically tune your model's hyperparameters to improve its accuracy. Once your model is trained and tuned, Amazon SageMaker makes it easy to deploy the model in production so you can start generating predictions on new data (a process called inference). Amazon SageMaker deploys your model on an auto-scaling cluster of Amazon EC2 instances spread across multiple Availability Zones to deliver both high performance and high availability. It also includes built-in A/B testing capabilities to help you test your model and experiment with different versions to achieve the best results.

Amazon MQ is a managed message broker service for Apache ActiveMQ that makes it easy to set up and operate message brokers in the cloud. Message brokers allow different software systems, often written in different programming languages and running on different platforms, to communicate and exchange information.
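To make the broker idea concrete, here is a toy, single-process sketch in Python. It is a hypothetical illustration, not the Amazon MQ, Apache ActiveMQ, or RabbitMQ API, and the class and method names are invented for this example: producers and consumers share only a queue name and a message format, and the broker hands messages over in first-in, first-out order.

```python
from collections import defaultdict, deque


class InMemoryBroker:
    """A toy, single-process message broker (hypothetical illustration only).

    Real brokers such as Apache ActiveMQ (behind Amazon MQ) or RabbitMQ run as
    separate servers, persist messages, and speak network protocols.
    """

    def __init__(self):
        # One FIFO queue per queue name, created on first use.
        self._queues = defaultdict(deque)

    def publish(self, queue_name, message):
        """Producer side: append a message to the named queue."""
        self._queues[queue_name].append(message)

    def consume(self, queue_name):
        """Consumer side: remove and return the oldest message, or None if empty."""
        queue = self._queues[queue_name]
        return queue.popleft() if queue else None

    def depth(self, queue_name):
        """Number of messages currently waiting in the named queue."""
        return len(self._queues[queue_name])


if __name__ == "__main__":
    broker = InMemoryBroker()
    # A producer (say, a web front end) and a consumer (say, a billing
    # service) only need to agree on the queue name and the message format.
    broker.publish("orders", {"order_id": 1, "amount": 9.99})
    broker.publish("orders", {"order_id": 2, "amount": 4.50})
    print(broker.consume("orders"))  # {'order_id': 1, 'amount': 9.99}
    print(broker.depth("orders"))  # 1
```

A real broker adds what this sketch leaves out: a network protocol (for example AMQP, which both RabbitMQ and ActiveMQ support), persistence, acknowledgements, and routing rules.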

Amazon SageMaker is a fully-managed service that enables data scientists and developers to quickly and easily build, train, and deploy machine learning models at any scale. It includes modules that can be used together or independently to build, train, and deploy your machine learning models. Amazon SageMaker makes it easy to build ML models and get them ready for training by providing everything you need to quickly connect to your training data, and to select and optimize the best algorithm and framework for your application. Hosted Jupyter notebooks make it easy to explore and visualize your training data stored in Amazon S3. You can connect directly to data in S3, or use AWS Glue to move data from Amazon RDS, Amazon DynamoDB, and Amazon Redshift into S3 for analysis in your notebook. To help you select your algorithm, Amazon SageMaker includes the 10 most common machine learning algorithms, pre-installed and optimized; AWS states that they deliver up to 10 times the performance of running these algorithms elsewhere. Amazon SageMaker also comes pre-configured to run TensorFlow and Apache MXNet, two of the most popular open source frameworks.

To let your application access the hardware resources on your device, you declare them as local resources in your AWS Greengrass group in the AWS Greengrass console. To use these features, please sign up for the preview.

AWS Greengrass ML Inference also includes prebuilt AWS Lambda templates that you can use to create an inference app quickly. The provided Lambda blueprint shows you common tasks such as loading models, importing Apache MXNet, and taking actions based on predictions. The prebuilt Apache MXNet package for NVIDIA Jetson, Intel Apollo Lake, and Raspberry Pi devices can be downloaded directly from the cloud or included as part of the software in your AWS Greengrass group. In many applications, your ML model will perform better when it can fully use all the hardware resources available on the device, and AWS Greengrass ML Inference helps with this.
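The Lambda blueprint itself is not reproduced here. As a rough sketch of the pattern it describes (load a model once, run inference on each event, act on the prediction), the Python below uses a hypothetical stand-in model so it runs without Apache MXNet or the AWS Greengrass SDK. The handler name `function_handler`, the feature names, the weights, and the threshold are all assumptions for illustration.

```python
import json


def load_model():
    """Hypothetical stand-in for loading a trained model (e.g. an MXNet model
    shipped with the Greengrass group). Here it is a fixed linear scorer so
    the sketch runs without any ML framework installed."""
    weights = {"temperature": 0.8, "vibration": 1.5}  # made-up weights
    bias = -100.0

    def predict(features):
        # Weighted sum of the known input features plus a bias term.
        return sum(w * features.get(name, 0.0) for name, w in weights.items()) + bias

    return predict


# Load once at module import so every invocation reuses the same model
# instead of reloading it for each event.
MODEL = load_model()
ALERT_THRESHOLD = 0.0  # hypothetical decision boundary


def function_handler(event, context):
    """Run local inference on one sensor reading and choose an action."""
    # Accept either an already-parsed dict or a JSON string payload.
    features = event if isinstance(event, dict) else json.loads(event)
    score = MODEL(features)
    # The action is decided on the device, without a round trip to the cloud.
    action = "shut_down_motor" if score > ALERT_THRESHOLD else "no_action"
    return {"score": score, "action": action}


if __name__ == "__main__":
    print(function_handler({"temperature": 70.0, "vibration": 40.0}, None))
    print(function_handler('{"temperature": 20.0, "vibration": 5.0}', None))
```

Loading the model at module level rather than inside the handler is the design choice worth noting: the expensive load happens once, and each event only pays for a single prediction.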

# Tutorial Mac OS X RabbitMQ 2017 install #

AWS Greengrass ML Inference includes a prebuilt Apache MXNet framework to install on AWS Greengrass devices, so you don't have to build it from scratch.

# Tutorial Mac OS X RabbitMQ 2017 download #

AWS Greengrass Machine Learning (ML) Inference makes it easy to perform ML inference locally on AWS Greengrass devices using models that are built and trained in the cloud. Until now, building and training ML models and running ML inference were done almost exclusively in the cloud. Training ML models requires massive computing resources, so it is a natural fit for the cloud. With AWS Greengrass ML Inference, however, your AWS Greengrass devices can make smart decisions quickly as data is being generated, even when they are disconnected. The capability simplifies each step of deploying ML, including accessing ML models, deploying models to devices, building and deploying ML frameworks, creating inference apps, and using on-device accelerators such as GPUs and FPGAs. For example, you can access a deep learning model built and trained in Amazon SageMaker directly from the AWS Greengrass console and then download it to your device as part of an AWS Greengrass group.
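A minimal sketch of deciding locally while disconnected, assuming a hypothetical `uplink` callable that sends a record to the cloud and raises `ConnectionError` when the device is offline. The class name, the buffering strategy, and the record format are illustrative choices, not part of AWS Greengrass: the device makes each decision immediately and keeps the results in a local queue until they can be forwarded.

```python
from collections import deque


class EdgeDevice:
    """Hypothetical edge application: decide locally, sync to the cloud later.

    `predict` is any local model function; `uplink` is an assumed cloud client
    that raises ConnectionError while the device is offline.
    """

    def __init__(self, predict, uplink):
        self.predict = predict
        self.uplink = uplink
        self.pending = deque()  # records waiting for connectivity

    def handle_reading(self, reading):
        # The decision is made on the device, so it never waits on the network.
        decision = self.predict(reading)
        self.pending.append({"reading": reading, "decision": decision})
        self.flush()
        return decision

    def flush(self):
        # Forward buffered records oldest first; on the first failure, stop
        # and keep the remaining records for the next attempt.
        while self.pending:
            try:
                self.uplink(self.pending[0])
            except ConnectionError:
                return
            self.pending.popleft()


if __name__ == "__main__":
    connected = False

    def fake_uplink(record):
        # Hypothetical cloud client that fails while the device is offline.
        if not connected:
            raise ConnectionError("offline")
        print("sent", record)

    device = EdgeDevice(lambda value: "alert" if value > 10 else "ok", fake_uplink)
    print(device.handle_reading(12))  # decided locally while offline
    print(len(device.pending))  # record is buffered
    connected = True
    device.flush()  # buffered record is forwarded once back online
```

Because `flush` only removes a record after a successful send and never reorders the queue, records reach the cloud in the order the decisions were made.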
